By Tom McCoy


| Meaning | Prior | Count | Likelihood | Prior × Likelihood | Posterior |
|---|---|---|---|---|---|

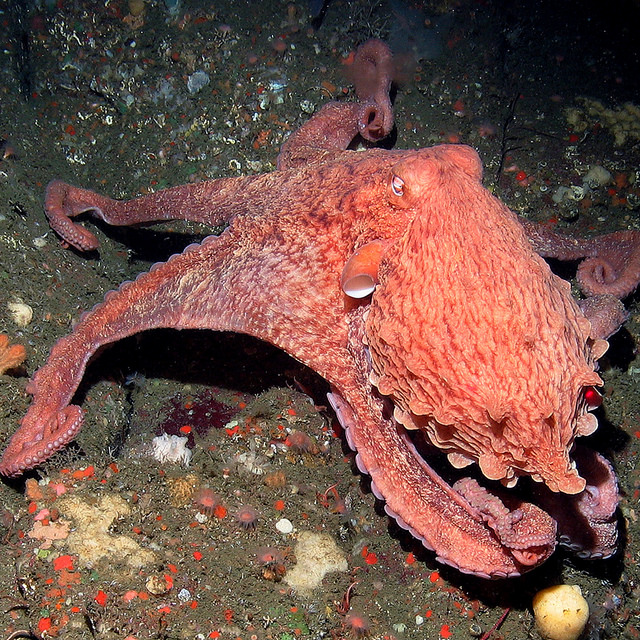

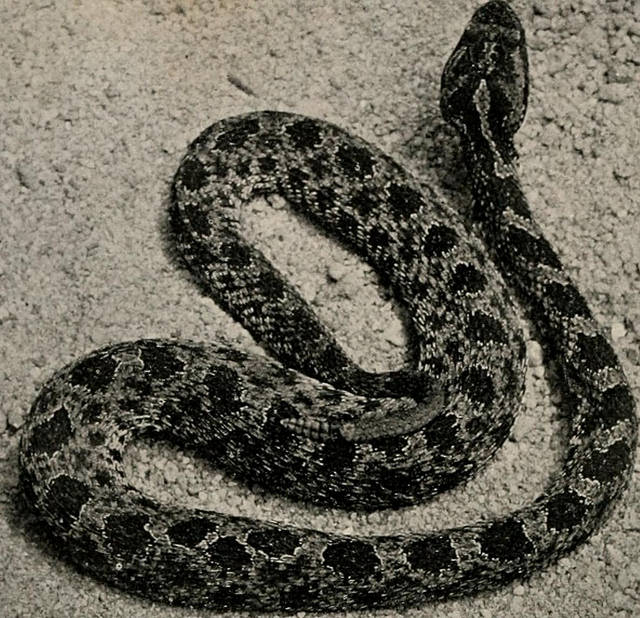

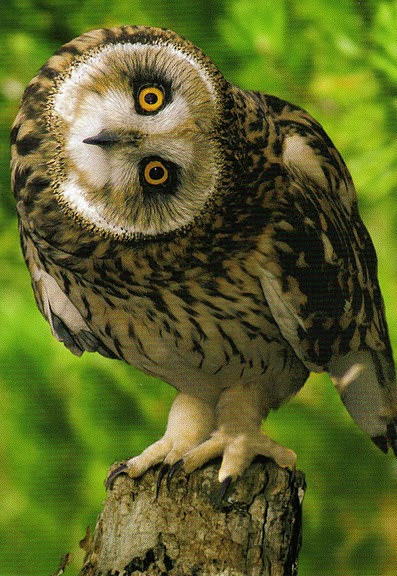

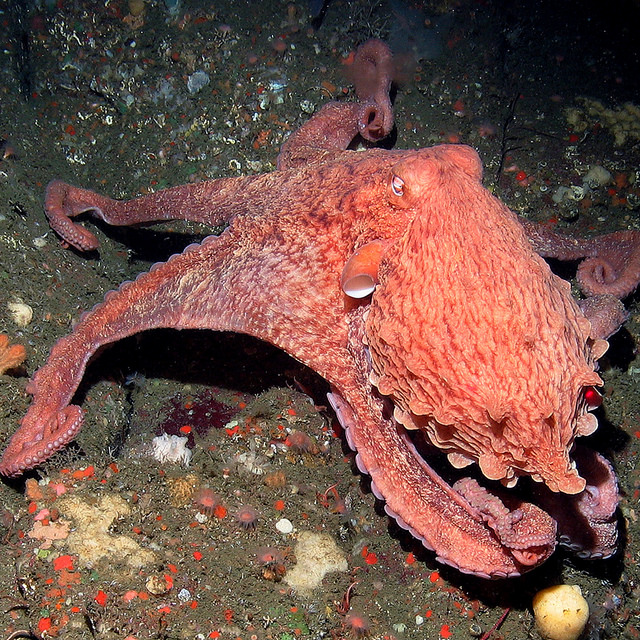

Let's take an example. Suppose you heard someone utter the word "ghoti" to refer to the following entity:

Given just this information, there are several options for what this word could mean, including "animal", "vertebrate", "fish", "clownfish", and "this specific clownfish." Although people do learn some words from direct instruction (e.g. by being taught them in vocabulary classes or looking them up in dictionaries), most word meanings are acquired unconsciously. So how does this unconscious acquisition process happen when there are so many alternatives to consider?

Xu and Tenenbaum's answer is that this process combines prior knowledge about likely word meanings with the evidence the learner observes. For example, one type of prior belief that people seem to have is called a "basic-level bias." That is, all else being equal, people will assume that a new word refers to some "basic" level concept (such as "clownfish") rather than an overly specific "subordinate" level concept (such as "this specific clownfish") or an exceedingly abstract "superordinate" level concept (such as "vertebrate").

The available evidence is also important. Suppose that you now hear the word "ghoti" a second time, still referring to the exact same entity:

The word could still mean any of the five concepts from before ("animal", "vertebrate", "fish", "clownfish", or "this specific clownfish"). However, it's a bit suspicious that both times you've heard this word, it has referred to the same specific clownfish, and you've never heard it refer to anything else. Given this suspicious coincidence, you're now probably more inclined to think that "ghoti" is the name of this specific fish.

Now suppose you hear the word a third time, and this time it refers to this tuna:

Now you know for sure that "ghoti" cannot mean "clownfish" or "this specific clownfish" because those meanings would contradict the available evidence. The remaining options are "fish", "vertebrate", and "animal". Even though all these are possible, it is a suspicious coincidence that you've only heard the word refer to fish and not to other vertebrates or other animals. Therefore, at this point, you'll tentatively conclude that "ghoti" means "fish."
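The elimination step above can be sketched as a filter: keep only the meanings whose extension (the set of things the meaning covers) contains every referent observed so far. The toy world below is hypothetical. Only "clownfish 5", "tuna 1", "clownfish 1", "wolf 7", "raven 4", and the total of 9 fish come from the text; the other entities and the category sizes are made up for illustration.

```python
# Hypothetical toy world: only "clownfish 5", "tuna 1", "clownfish 1", "wolf 7",
# "raven 4" and the total of 9 fish come from the text; everything else is made up.
CLOWNFISH = {"clownfish 5", "clownfish 1", "clownfish 2"}
FISH = CLOWNFISH | {"tuna 1"} | {f"other fish {i}" for i in range(1, 6)}  # 9 fish
VERTEBRATES = FISH | {"wolf 7", "raven 4", "horse 1", "tortoise 1"}
ANIMALS = VERTEBRATES | {"spider 1", "mollusc 1"}

# Each candidate meaning of "ghoti" maps to the set of entities it covers.
EXTENSIONS = {
    "this specific clownfish": {"clownfish 5"},
    "clownfish": CLOWNFISH,
    "fish": FISH,
    "vertebrate": VERTEBRATES,
    "animal": ANIMALS,
}


def consistent_meanings(observed):
    """Return the meanings whose extension contains every observed referent."""
    return [m for m, ext in EXTENSIONS.items() if set(observed) <= ext]


print(consistent_meanings(["clownfish 5", "clownfish 5"]))
print(consistent_meanings(["clownfish 5", "clownfish 5", "tuna 1"]))
```

After the two clownfish observations all five meanings survive; once the tuna is observed, only "fish", "vertebrate", and "animal" remain. The filter says nothing about which survivor is most plausible; that is the job of the prior and likelihood introduced next.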

Bayesian inference unites the two types of reasoning discussed above. Our proposed meaning of the word is our hypothesis, and our observations of the word's usage are our evidence. Using Bayes' theorem, we can then write:

p(ghoti = "fish" | evidence) ∝ p(ghoti = "fish") * p(evidence | ghoti = "fish")

The prior term, p(ghoti = "fish"), captures any prior beliefs the learner might have about word meanings. The likelihood term, p(evidence | ghoti = "fish"), captures our intuitions about how plausible our observations would be under a given meaning for "ghoti." Together they are used to compute the posterior probability p(ghoti = "fish" | evidence), which represents how probable it is that "ghoti" means "fish" in the face of our evidence. (Recall that the symbol ∝ means "is proportional to." It is not a ghoti, even though it looks like one.)
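The ∝ hides a normalizing constant, p(evidence), which for a finite set of candidate meanings is just the sum of prior × likelihood over all of them. In notation of our own choosing (h for a candidate meaning, e for the evidence; these symbols are not from the original), the full form is:

```latex
p(h \mid e) = \frac{p(h)\, p(e \mid h)}{\sum_{h'} p(h')\, p(e \mid h')}
```

Because the denominator is the same for every h, it does not change which meaning is most probable; it only rescales the prior × likelihood scores so that they sum to 1.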

For the simulations here, we build on the basic-level bias by assuming that the prior probability of a word meaning is determined entirely by that meaning's position in the taxonomic tree. Basic-level concepts ("clownfish", "horse", "spider", "tortoise") have the highest prior probability; immediately subordinate concepts ("clownfish 1", "wolf 7", "raven 4") and immediately superordinate concepts ("fish", "mammal", "mollusc") have lower prior probabilities; and more distantly superordinate categories ("vertebrate", "animal") have even lower prior probabilities.
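A minimal sketch of such a position-based prior, assuming hypothetical numbers: the text fixes only the ordering (basic level highest, immediate neighbours lower, more distant superordinates lower still), so the weights 10, 2, and 1 and the placement of the five meanings below are illustrative choices, not the demo's actual values.

```python
from fractions import Fraction

# Hypothetical placement of the five candidate meanings in the taxonomic tree, as a
# signed distance from the basic level (negative = subordinate, positive = superordinate).
LEVELS = {
    "this specific clownfish": -1,
    "clownfish": 0,
    "fish": 1,
    "vertebrate": 2,
    "animal": 3,
}


def level_weight(distance):
    """Hypothetical unnormalized weight for a distance from the basic level.
    Only the ordering (basic > immediate neighbours > more distant) follows the text."""
    if distance == 0:
        return Fraction(10)
    if distance == 1:
        return Fraction(2)
    return Fraction(1)


def taxonomic_prior(levels):
    """Prior over meanings that depends only on taxonomic position, normalized to sum to 1."""
    weights = {meaning: level_weight(abs(d)) for meaning, d in levels.items()}
    total = sum(weights.values())
    return {meaning: w / total for meaning, w in weights.items()}


prior = taxonomic_prior(LEVELS)
for meaning, p in prior.items():
    print(meaning + ":", p)
```

With these weights the prior is 5/8 for "clownfish", 1/8 each for "this specific clownfish" and "fish", and 1/16 each for "vertebrate" and "animal". Using `Fraction` keeps the arithmetic exact, which makes the numbers easy to compare with hand calculations.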

To compute the likelihood, we can use the basic probability rule that p(A and B | C) = p(A | C) * p(B | C), which holds when A and B are conditionally independent given C. In our case, the likelihood of our sequence of observations is p(["ghoti" is used to refer to clownfish 5] and ["ghoti" is used to refer to clownfish 5] and ["ghoti" is used to refer to tuna 1] | "ghoti" means "fish"). Using this rule, we can rewrite it as p("ghoti" is used to refer to clownfish 5 | "ghoti" means "fish") * p("ghoti" is used to refer to clownfish 5 | "ghoti" means "fish") * p("ghoti" is used to refer to tuna 1 | "ghoti" means "fish").
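In compact notation of our own choosing (o_1 and o_2 for the two observations of clownfish 5, o_3 for the observation of tuna 1, and h for the hypothesis that "ghoti" means "fish"), the factorization reads as follows, assuming the observations are conditionally independent given h:

```latex
\begin{aligned}
p(o_1, o_2, o_3 \mid h) &= p(o_1 \mid h)\, p(o_2 \mid h)\, p(o_3 \mid h) \\
&= \prod_{i=1}^{3} p(o_i \mid h)
\end{aligned}
```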

These separate probabilities are simple to compute. Consider p("ghoti" is used to refer to clownfish 5 | "ghoti" means "fish"). If we suppose that "ghoti" means "fish" (which is exactly what conditioning on this meaning does), then we should be equally likely to hear "ghoti" used to refer to any of the fish in our world. Since there are 9 fish in the scenario, p("ghoti" is used to refer to clownfish 5 | "ghoti" means "fish") = 1/9. The same argument gives the same value for p("ghoti" is used to refer to tuna 1 | "ghoti" means "fish"), so the final likelihood is (1/9)^3 = 1/729.
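The calculation above can be checked with a short sketch. Each observation gets probability 1/(number of things the meaning covers) if the referent falls under the meaning, and 0 otherwise; the per-observation values are then multiplied. The names of the seven fish not mentioned in the text ("fish 1" to "fish 7") are hypothetical placeholders; only the total of 9 fish comes from the scenario.

```python
from fractions import Fraction


def single_likelihood(referent, extension):
    """p("ghoti" refers to referent | "ghoti" means the category with this extension),
    assuming every member of the category is an equally likely referent."""
    if referent not in extension:
        return Fraction(0)
    return Fraction(1, len(extension))


def sequence_likelihood(referents, extension):
    """Multiply the per-observation probabilities (conditional independence)."""
    result = Fraction(1)
    for referent in referents:
        result *= single_likelihood(referent, extension)
    return result


# Hypothetical names for the 9 fish; only "clownfish 5" and "tuna 1" are named in the text.
FISH = {"clownfish 5", "tuna 1"} | {f"fish {i}" for i in range(1, 8)}
observations = ["clownfish 5", "clownfish 5", "tuna 1"]

print(len(FISH))                                            # 9
print(sequence_likelihood(observations, FISH))              # 1/729
print(sequence_likelihood(observations, {"clownfish 5"}))   # 0
```

The last line shows how the same rule rules out "this specific clownfish": a single observation outside the meaning's extension contributes a factor of 0.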

To figure out which meaning the learner settles on, we iterate over all five candidate meanings, multiply each one's prior by its likelihood, normalize these products so that they sum to 1 to obtain posterior probabilities, and choose the meaning with the highest posterior probability.
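Putting the pieces together, here is a minimal end-to-end sketch of that loop. It rests on hypothetical assumptions: the toy world (3 clownfish, 13 vertebrates, 15 animals) and the prior weights (10 for the basic level, 2 for immediate neighbours, 1 for more distant superordinates) are illustrative; only the 9 fish and the named individuals come from the text. Under these particular numbers the sketch reproduces the three stages of the story; other choices of weights or category sizes could change the intermediate answers.

```python
from fractions import Fraction

# Hypothetical toy world: only "clownfish 5", "tuna 1", "clownfish 1", "wolf 7",
# "raven 4" and the total of 9 fish come from the text; everything else is made up.
CLOWNFISH = {"clownfish 5", "clownfish 1", "clownfish 2"}
FISH = CLOWNFISH | {"tuna 1"} | {f"other fish {i}" for i in range(1, 6)}
VERTEBRATES = FISH | {"wolf 7", "raven 4", "horse 1", "tortoise 1"}
ANIMALS = VERTEBRATES | {"spider 1", "mollusc 1"}

# meaning -> (extension, hypothetical unnormalized prior weight).
# The weights only respect the ordering described in the text.
HYPOTHESES = {
    "this specific clownfish": ({"clownfish 5"}, Fraction(2)),
    "clownfish": (CLOWNFISH, Fraction(10)),
    "fish": (FISH, Fraction(2)),
    "vertebrate": (VERTEBRATES, Fraction(1)),
    "animal": (ANIMALS, Fraction(1)),
}


def likelihood(observed, extension):
    """Product of per-observation probabilities: 1/|extension| if the referent
    belongs to the category, 0 otherwise."""
    result = Fraction(1)
    for referent in observed:
        result *= Fraction(1, len(extension)) if referent in extension else Fraction(0)
    return result


def infer(observed):
    """Score every meaning by prior weight * likelihood, normalize, and pick the best.
    Leaving the prior unnormalized is harmless: its constant cancels in the division."""
    scores = {m: w * likelihood(observed, ext) for m, (ext, w) in HYPOTHESES.items()}
    total = sum(scores.values())
    posterior = {m: s / total for m, s in scores.items()}
    return max(posterior, key=posterior.get), posterior


for observed in (["clownfish 5"],
                 ["clownfish 5", "clownfish 5"],
                 ["clownfish 5", "clownfish 5", "tuna 1"]):
    best, _ = infer(observed)
    print(len(observed), best)
```

With these assumptions the printed winners are "clownfish" after one observation (basic-level bias), "this specific clownfish" after two (the suspicious coincidence), and "fish" after the tuna appears.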