I am a postdoctoral researcher in the Department of Computer Science at Princeton University, advised by Tom Griffiths. Starting in January 2024, I will be an Assistant Professor in the Department of Linguistics at Yale University. Before coming to Princeton, I received a Ph.D. in Cognitive Science from Johns Hopkins University, co-advised by Tal Linzen and Paul Smolensky, and before that I received a B.A. in Linguistics from Yale University, advised by Robert Frank.

Research

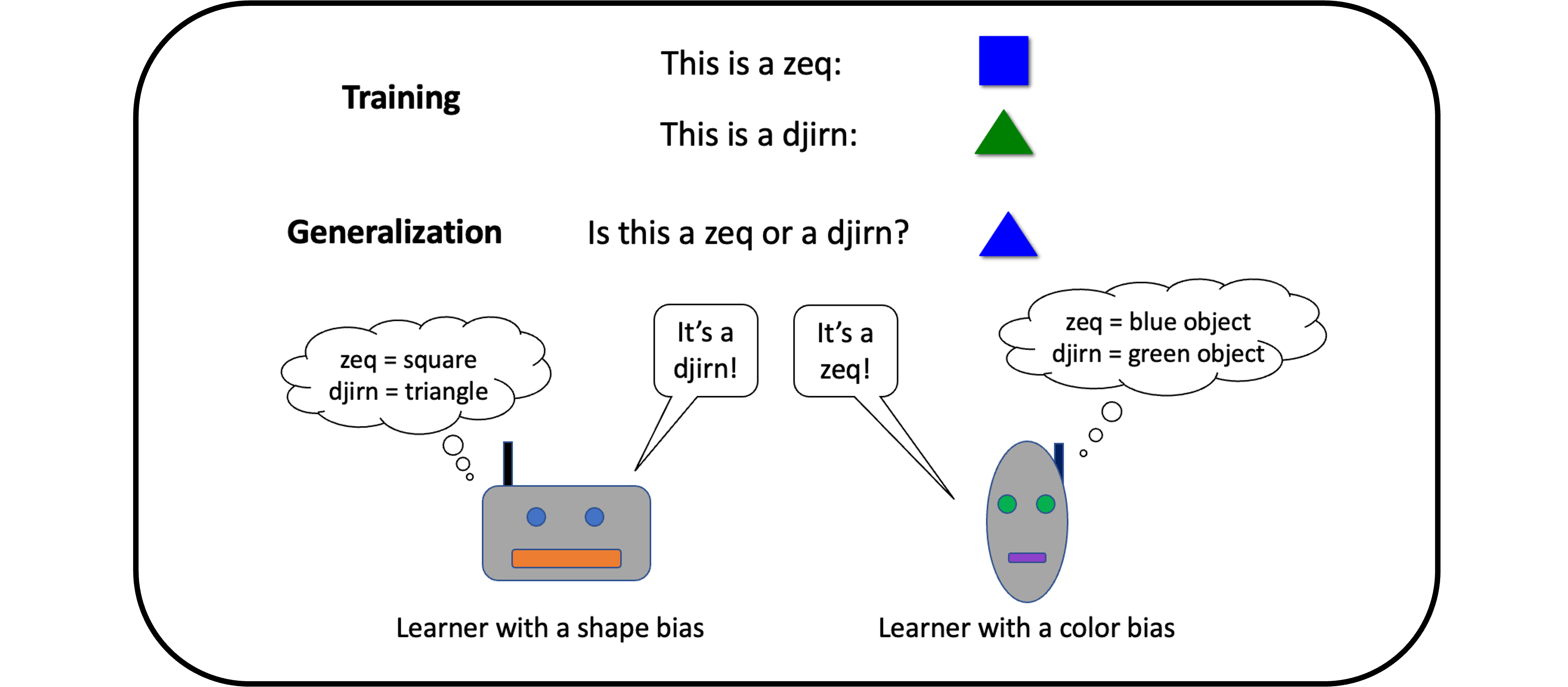

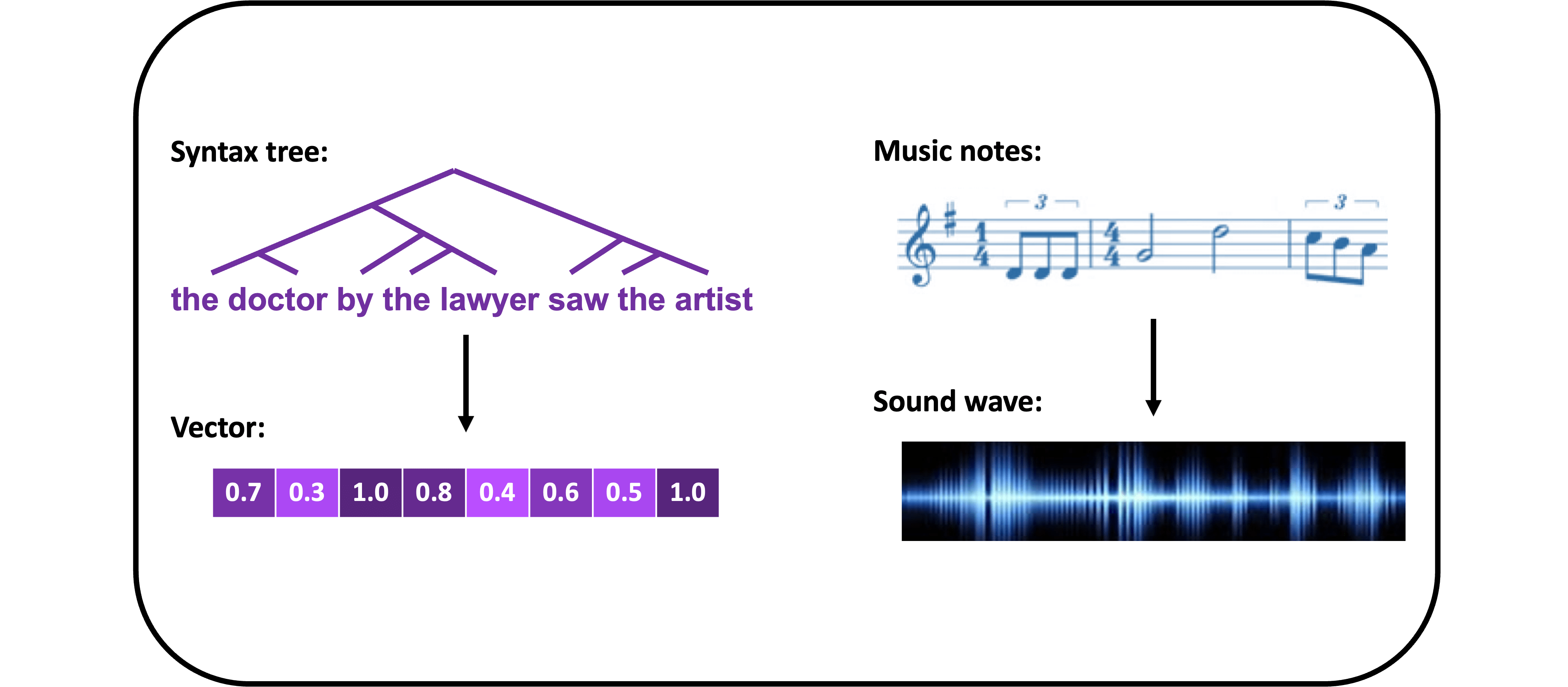

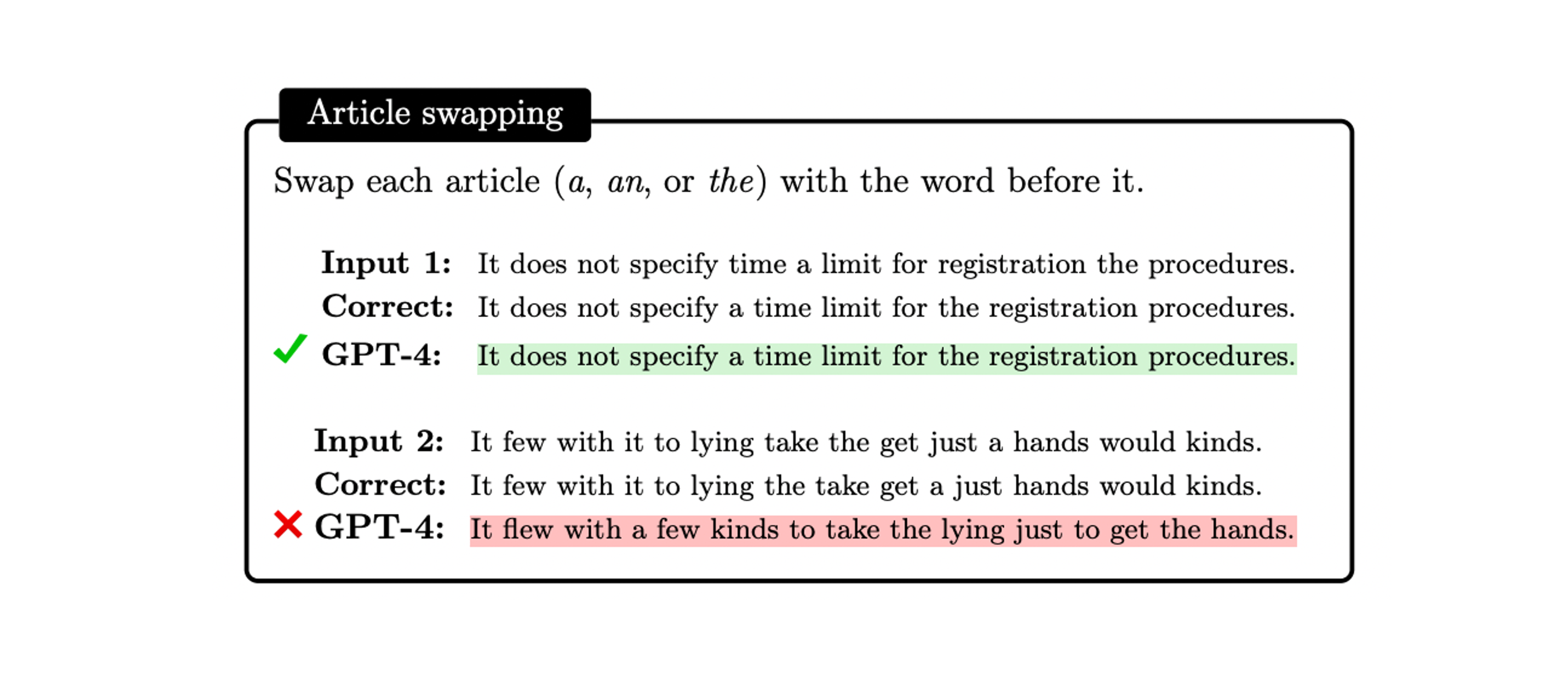

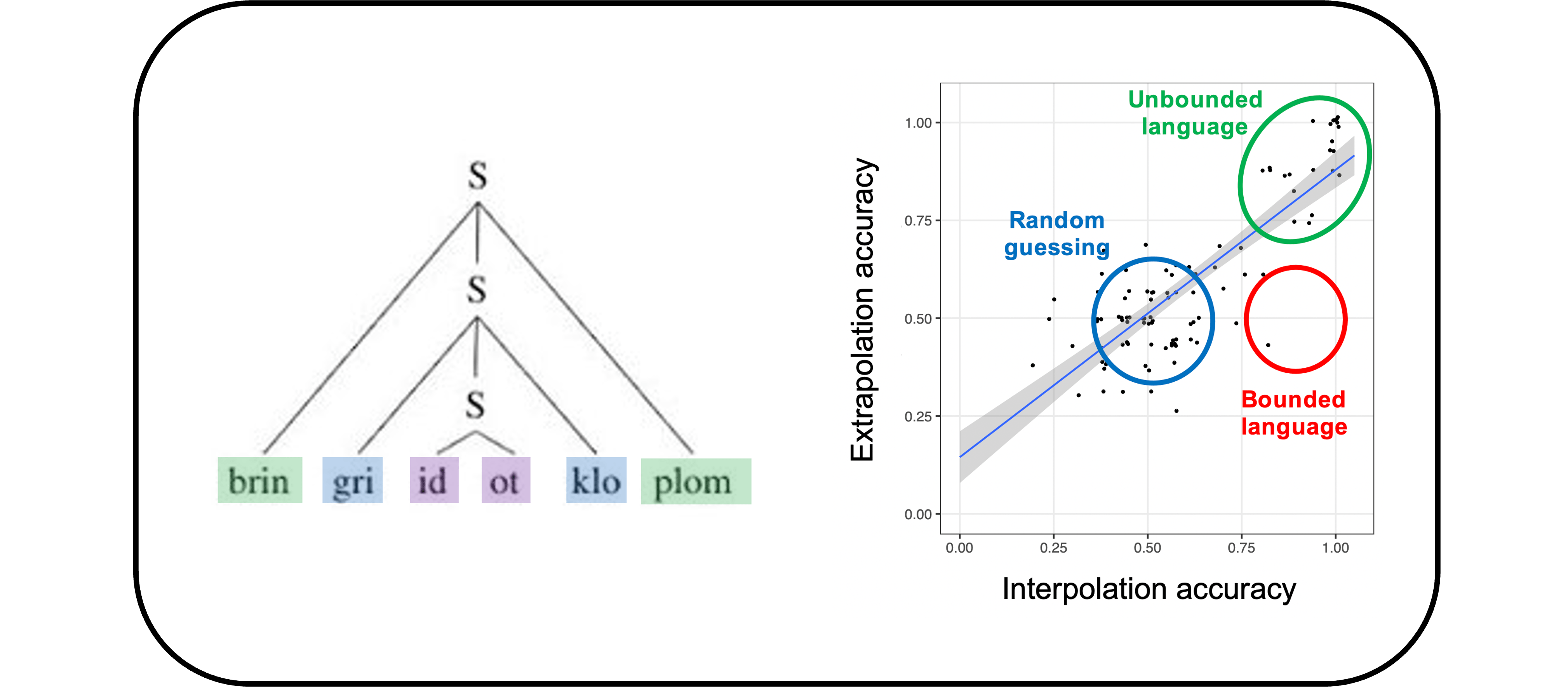

I study computational linguistics using techniques from cognitive science, machine learning, and natural language processing. My research focuses on understanding the computational principles that underlie human language. I am particularly interested in language learning and linguistic representations. On the side of learning, I investigate how people can acquire language from so little data and how we can replicate this ability in machines. On the side of representations, I investigate what types of machines can represent the structure of language and how they do it. Much of my research involves neural network language models, with an emphasis on connecting such systems to linguistics.

For a longer overview of my research, click here. If you want an even more detailed discussion, you can read this summary for a linguistics/cognitive science audience or this summary for a computer science audience, or check out my publications.

Prospective PhD students, postdocs, and undergraduate researchers

I will be considering PhD and postdoc applications for Fall 2024, through either the Yale Linguistics Department or the Yale Computer Science Department. See here for more information.

Conversation topics

Do you have a meeting scheduled with me but don’t know what to talk about? See this page for some topics that are often on my mind.

Representative papers

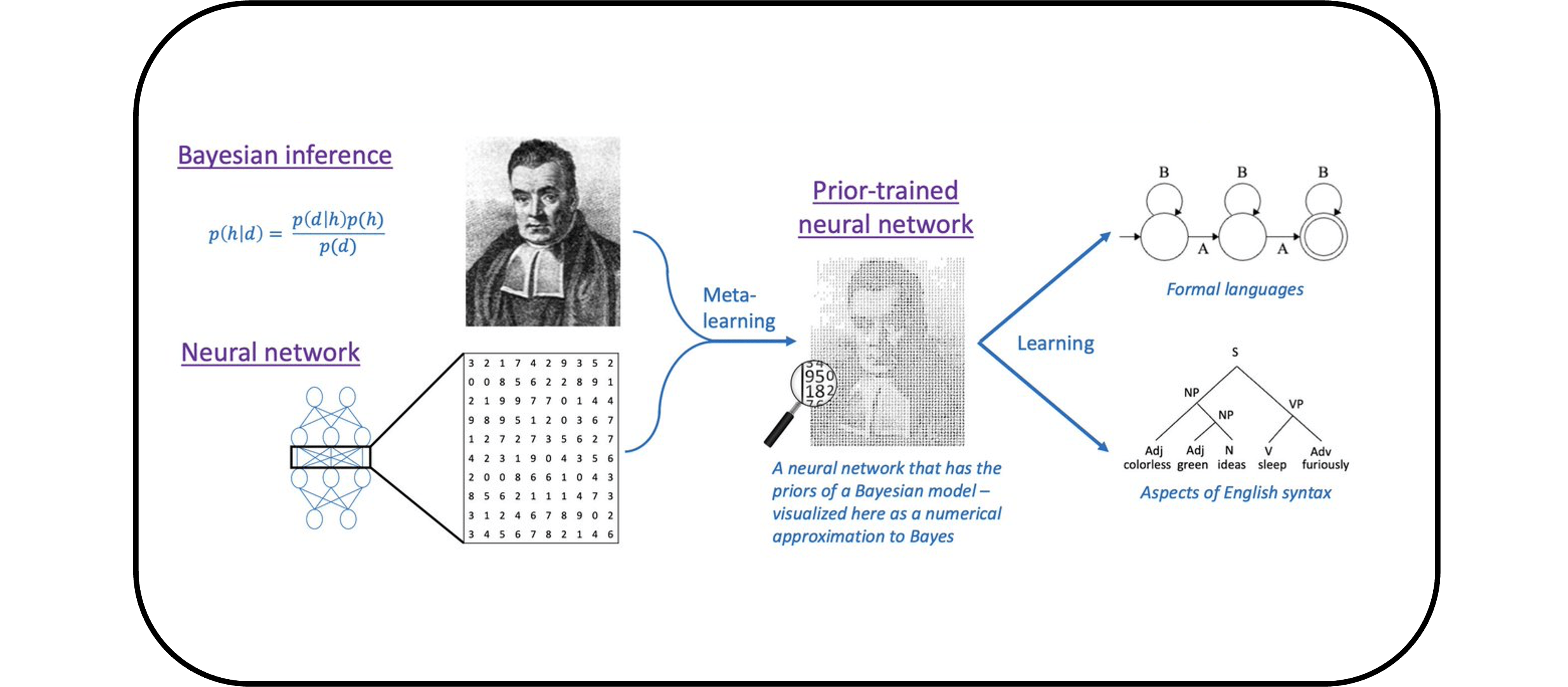

- R. Thomas McCoy and Thomas L. Griffiths. Modeling rapid language learning by distilling Bayesian priors into artificial neural networks. arXiv preprint arXiv:2305.14701. [pdf]

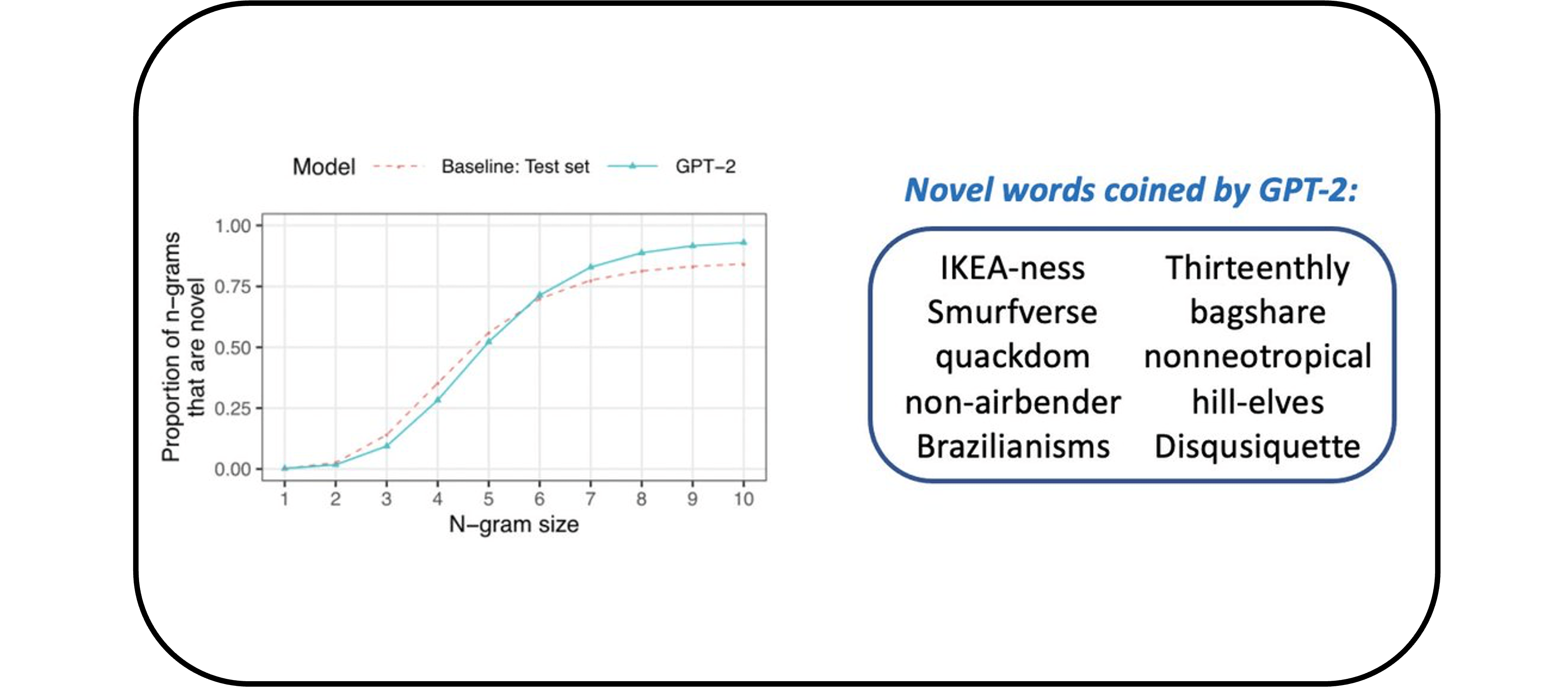

- R. Thomas McCoy, Shunyu Yao, Dan Friedman, Matthew Hardy, and Thomas L. Griffiths. Embers of Autoregression: Understanding Large Language Models Through the Problem They are Trained to Solve. arXiv preprint arXiv:2309.13638. [pdf]

- R. Thomas McCoy, Jennifer Culbertson, Paul Smolensky, and Géraldine Legendre. Infinite use of finite means? Evaluating the generalization of center embedding learned from an artificial grammar. Proceedings of the 43rd Annual Conference of the Cognitive Science Society. [pdf]

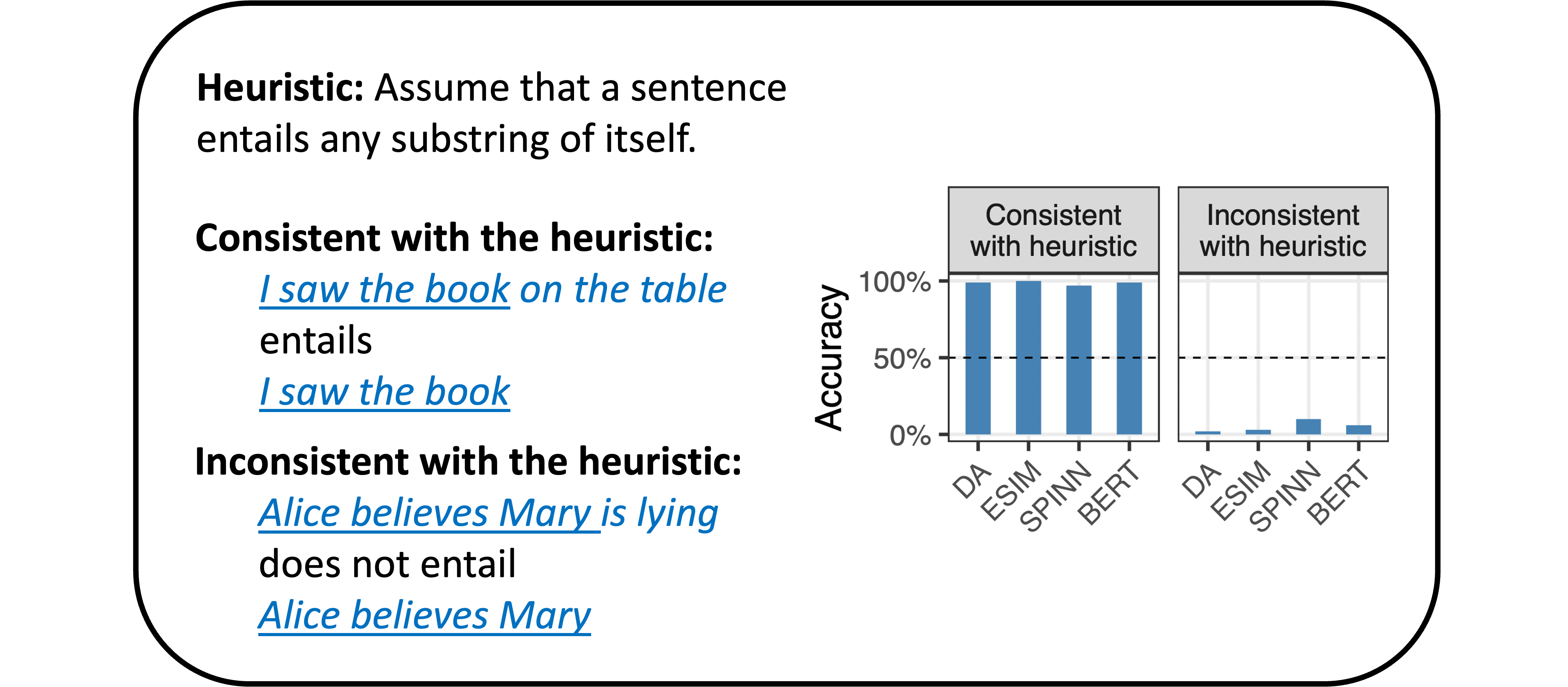

- R. Thomas McCoy, Ellie Pavlick, and Tal Linzen. Right for the Wrong Reasons: Diagnosing Syntactic Heuristics in Natural Language Inference. ACL 2019. [pdf]

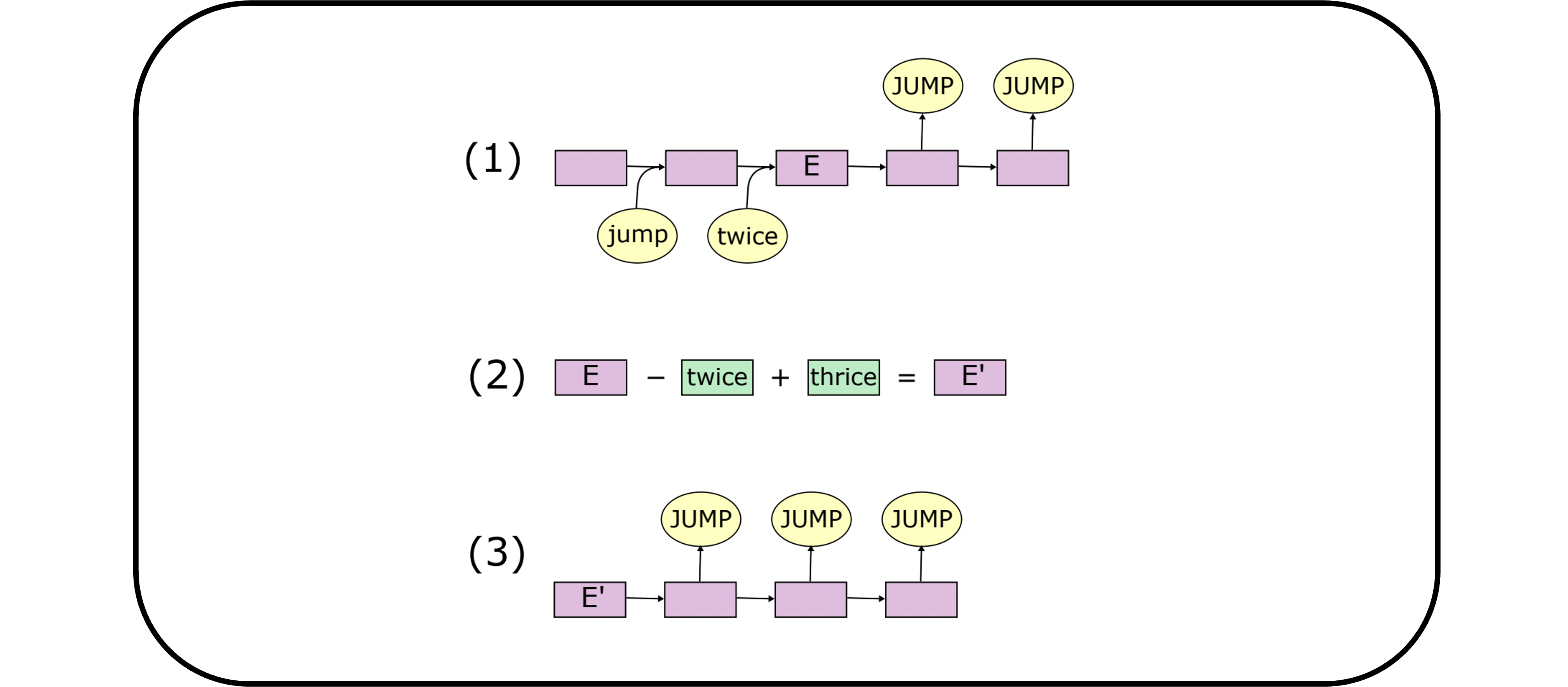

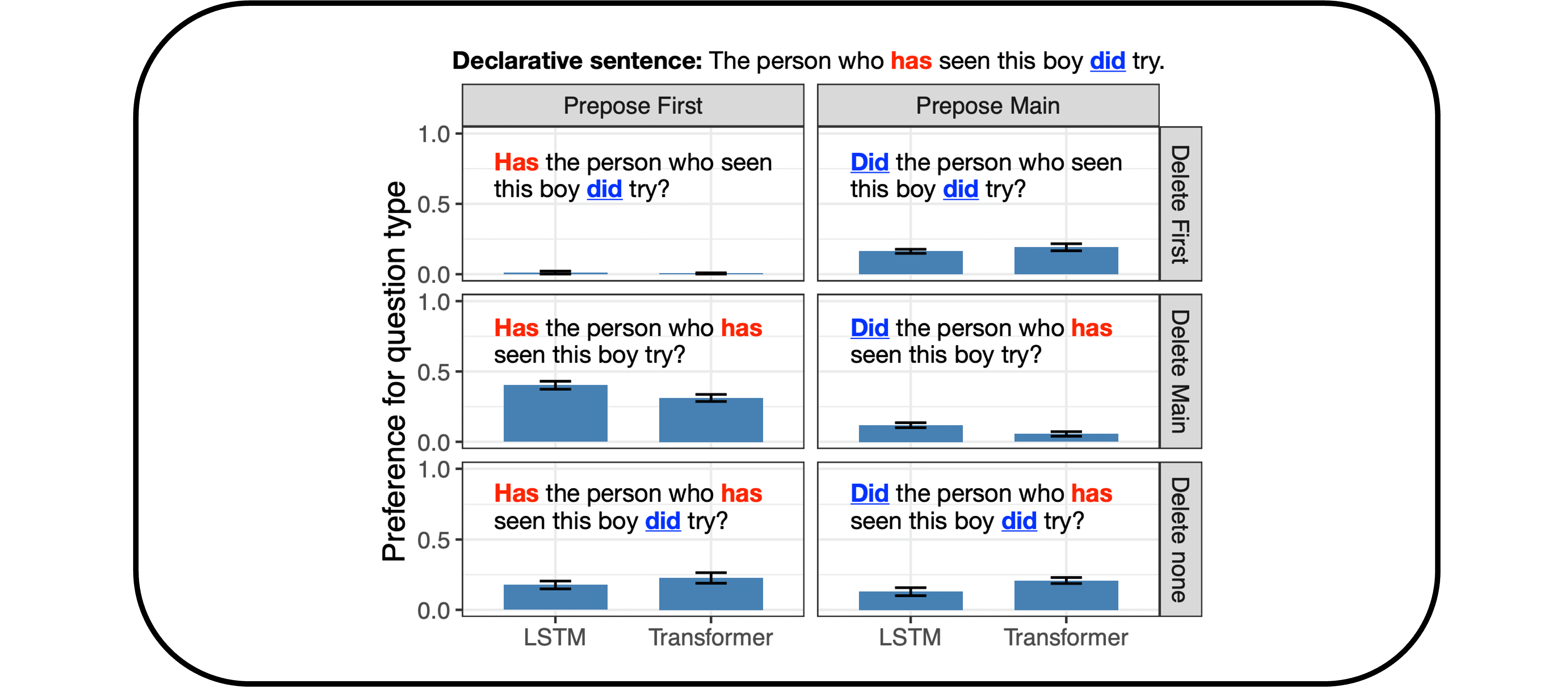

- R. Thomas McCoy, Robert Frank, and Tal Linzen. Does syntax need to grow on trees? Sources of hierarchical inductive bias in sequence-to-sequence networks. TACL. [pdf] [website]

- R. Thomas McCoy, Tal Linzen, Ewan Dunbar, and Paul Smolensky. RNNs implicitly implement Tensor Product Representations. ICLR 2019. [pdf] [demo]